Modeling and Improving Text Stability in Live Captions

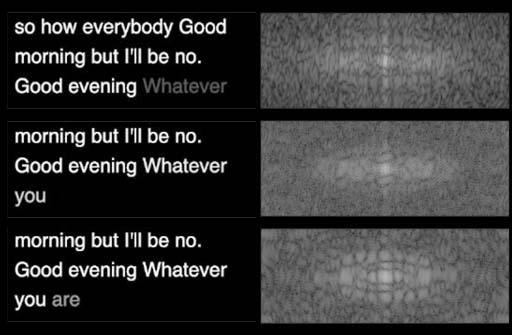

In recent years, live captions have gained significant popularity through their availability in remote video conferences, mobile applications, and the web. Unlike preprocessed subtitles, live captions require real-time responsiveness, which they achieve by showing interim speech-to-text results. As the prediction confidence changes, the captions may update, leading to visual instability that interferes with the user's viewing experience. In this work, we characterize the stability of live captions by proposing a vision-based flickering metric using luminance contrast and the Discrete Fourier Transform. Additionally, we assess the effect of unstable captions on the viewer through task load index surveys. Our analysis reveals significant correlations between the viewer's experience and our proposed quantitative metric. To enhance the stability of live captions without compromising responsiveness, we propose the use of tokenized alignment, word updates with semantic similarity, and smooth animation. Results from a crowdsourced study (N=123) comparing four strategies indicate that our stabilization algorithms lead to a significant reduction in viewer distraction and fatigue, while increasing viewers' reading comfort.
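To make the idea of a vision-based flickering metric concrete, the following is a minimal sketch, not the metric defined in this work. It assumes the caption region is available as a stack of RGB frames with values in [0, 1], converts each frame to luminance using the Rec. 709 weights (ignoring gamma for simplicity), takes a Discrete Fourier Transform along the time axis for every pixel, and averages the energy in the non-DC frequency bins. The function names `to_luminance` and `flicker_score`, the input layout, and the normalization are all illustrative assumptions.

```python
import numpy as np

# Rec. 709 luma weights; applied directly to the RGB values (gamma is ignored
# here, which is a simplifying assumption of this sketch).
_LUMA_WEIGHTS = np.array([0.2126, 0.7152, 0.0722])


def to_luminance(frames: np.ndarray) -> np.ndarray:
    """Convert RGB frames of shape (T, H, W, 3) to luminance of shape (T, H, W)."""
    frames = np.asarray(frames, dtype=np.float64)
    if frames.ndim != 4 or frames.shape[-1] != 3:
        raise ValueError("expected frames with shape (T, H, W, 3)")
    return frames @ _LUMA_WEIGHTS


def flicker_score(frames: np.ndarray) -> float:
    """Hypothetical flicker score: mean non-DC temporal spectral magnitude.

    A static caption region puts all of its energy in the DC bin and scores
    approximately 0; luminance that changes over time moves energy into the
    higher-frequency bins and raises the score.
    """
    lum = to_luminance(frames)
    num_frames = lum.shape[0]
    if num_frames < 2:
        raise ValueError("need at least two frames")
    # Per-pixel DFT along the time axis, normalized by the number of frames.
    spectrum = np.abs(np.fft.rfft(lum, axis=0)) / num_frames
    # Drop the DC bin (index 0), which only reflects average brightness.
    return float(spectrum[1:].mean())


if __name__ == "__main__":
    static = np.full((8, 4, 4, 3), 0.5)   # caption region that never changes
    flashing = np.zeros((8, 4, 4, 3))
    flashing[1::2] = 1.0                  # region that alternates black/white
    print(flicker_score(static), flicker_score(flashing))
```

Under these assumptions, a caption region that is rewritten often yields a higher score than one that only appends text, which is the kind of quantity that could then be compared against viewer-reported task load.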