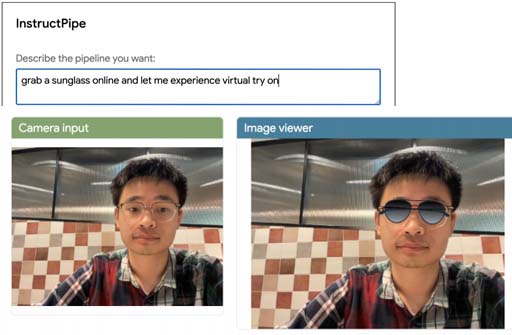

InstructPipe: Building Visual Programming Pipelines in Visual Blocks with Human Instructions Using LLMs

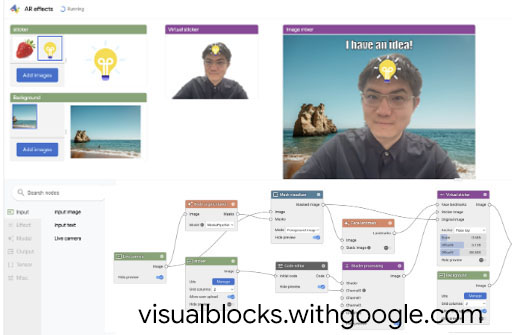

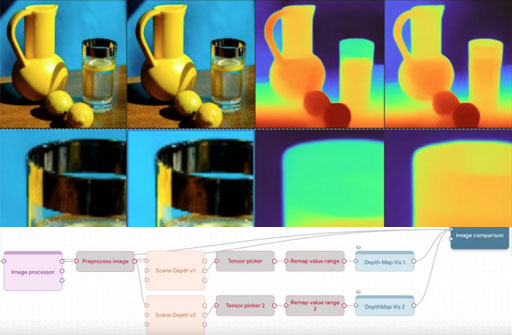

Visual Blocks: Visual Prototyping of AI Pipelines

The Rapsai project, a.k.a. Visual Blocks for ML, aims to make prototyping machine learning (ML) based multimedia applications more efficient and accessible. In recent years, there has been a proliferation of multimedia applications that leverage ML for interactive experiences. Prototyping ML-based applications is, however, still challenging, given complex workflows that are not ideal for design and experimentation. To better understand these challenges, we conducted a formative study with seven ML practitioners to gather insights about common ML evaluation workflows.

The study helped us derive six design goals, which informed Rapsai, a visual programming platform for rapid and iterative development of end-to-end ML-based multimedia applications. Rapsai features a node-graph editor to facilitate interactive characterization and visualization of ML model performance. Rapsai streamlines end-to-end prototyping with interactive data augmentation and model comparison capabilities in its no-coding environment. Our evaluation of Rapsai in four real-world case studies (N=15) suggests that practitioners can accelerate their workflow, make more informed decisions, analyze strengths and weaknesses, and holistically evaluate model behavior with real-world input. Try our live demo at Visual Blocks for ML and let us know if you find it useful in your classes or projects!

Publications

InstructPipe: Building Visual Programming Pipelines in Visual Blocks with Human Instructions Using LLMs 🎖️ Honorable Mentions Award

Experiencing InstructPipe: Building Multi-modal AI Pipelines Via Prompting LLMs and Visual Programming

Rapsai: Accelerating Machine Learning Prototyping of Multimedia Applications Through Visual Programming 🎖️ Honorable Mentions Award, 170K+ views

Experiencing Visual Blocks for ML: Visual Prototyping of AI Pipelines

Experiencing Rapid Prototyping of Machine Learning Based Multimedia Applications in Rapsai

Videos

Rapsai: Accelerating Machine Learning Prototyping of Multimedia Applications Through Visual Programming

Preview of Rapsai

Visual Blocks: Ridiculously rapid ML/AI prototyping and deployment to production

How to create effects with models and shaders using visualblocks

How to compare models from web using visualblocks

How to use models and build pipelines in Colab with visualblocks

Talks

Interactive Perception & Graphics for a Universally Accessible XR

Ruofei Du

CVPR 2025, Nashville, TN, USA.

pdf

Visual Blocks for ML: Visual Prototyping of AI Pipelines

Ruofei Du

ECE188, UCLA Remote Lecture.

Networking and System Challenges: Interactive Perception & Graphics for a Universally Accessible XR

Ruofei Du

NSF ImmerCon 2025, George Mason University, Fairfax, VA.

pdf

Computational Interaction for a Universally Accessible Metaverse

Ruofei Du

Invited Talk at KAIST by Prof. Sang Ho Yoon, Remote Talk.

pdf

Computational Interaction for a Universally Accessible Metaverse

Ruofei Du

Invited Talk at University of Minnesota by Prof. Zhu-Tian Chen, Minneapolis, MN, USA.

pdf

Experiencing InstructPipe: Building Multi-modal AI Pipelines via Prompting LLMs and Visual Programming

CHI 2024, Hawaii, USA.

Visual Blocks for ML: Visual Prototyping of AI Pipelines

Ruofei Du

CS139: Human-Centered AI @ Stanford, Stanford, Palo Alto.

Interactive Perception & Graphics for a Universally Accessible Metaverse

Ruofei Du

University of Virginia CS Fall 2023 Distinguished Speakers, Charlottesville, Virginia, USA.

Rapsai: Accelerating Machine Learning Prototyping of Multimedia Applications through Visual Programming

Ruofei Du

CHI 2023, Hamburg, Germany.

pdf | talk (onsite), video, video (short) | gSlides | cite

Interactive Perception & Graphics for a Universally Accessible Metaverse

Ruofei Du

Invited Talk at UCLA by Prof. Yang Zhang, Remote Talk.

Interactive Graphics for a Universally Accessible Metaverse

Ruofei Du

Invited Talk at ECL Seminar Series by Dr. Alaeddin Nassani, Remote Talk.

Interactive Graphics for a Universally Accessible Metaverse

Ruofei Du

Invited Talk at UICC 2023 sponsored by University of Iowa ACM Student Chapter, Iowa City, Iowa.

Interactive Graphics for a Universally Accessible Metaverse

Ruofei Du

Invited Talk at Empathic Computing Lab, Remote Talk.

pdf