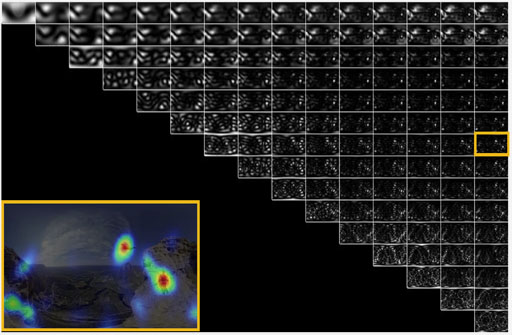

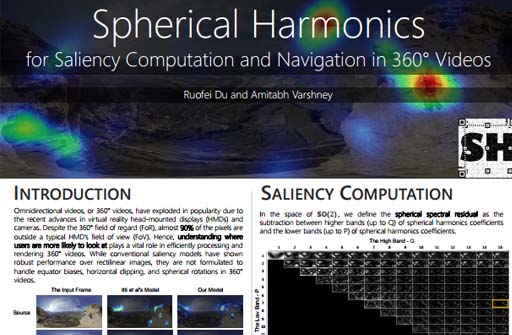

Omnidirectional videos, or 360° videos, have exploded in popularity due to recent advances in virtual reality head-mounted displays (HMDs) and cameras. Despite the 360° field of regard (FoR), almost 90% of the pixels are outside a typical HMD's field of view (FoV). Hence, understanding where users are most likely to look plays a vital role in efficiently streaming and rendering 360° videos. While conventional saliency models have shown robust performance on rectilinear images, they are not formulated to handle equatorial bias, horizontal clipping, and spherical rotations in 360° videos. In this paper, we present a novel GPU-driven pipeline for saliency computation and virtual cinematography in 360° videos using spherical harmonics (SH). By analyzing the SH spectrum of the 360° video, we extract the spectral residual by accumulating the SH coefficients between a low band and a high band. Our model runs 5× to 13× faster than the classic model of Itti et al. and runs at over 60 FPS for 4K videos. Further, our interactive computation of spherical saliency can be used for saliency-guided virtual cinematography in 360° videos. We formulate a spatiotemporal model that ensures large saliency coverage while reducing camera movement jitter. Our pipeline can be used to process, navigate, and stream 360° videos in real time.
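The band-limited spectral residual described above can be illustrated with a small CPU sketch. This is a minimal sketch under stated assumptions, not the authors' GPU pipeline: it assumes a grayscale equirectangular frame stored as a list of rows, uses the real orthonormal SH basis with midpoint quadrature over pixel centres, reads "between a low band and a high band" as the inclusive range `low` ≤ l ≤ `high`, and takes the absolute value of the band-pass reconstruction as the saliency value. The helper names `legendre_p`, `real_sh`, and `sh_band_saliency` are hypothetical.

```python
import math


def legendre_p(l: int, m: int, x: float) -> float:
    """Associated Legendre polynomial P_l^m(x), 0 <= m <= l, via the standard recurrence."""
    pmm = 1.0
    if m > 0:
        somx2 = math.sqrt((1.0 - x) * (1.0 + x))
        fact = 1.0
        for _ in range(m):
            pmm *= -fact * somx2
            fact += 2.0
    if l == m:
        return pmm
    pmmp1 = x * (2 * m + 1) * pmm
    if l == m + 1:
        return pmmp1
    pll = 0.0
    for ll in range(m + 2, l + 1):
        pll = ((2 * ll - 1) * x * pmmp1 - (ll + m - 1) * pmm) / (ll - m)
        pmm, pmmp1 = pmmp1, pll
    return pll


def real_sh(l: int, m: int, theta: float, phi: float) -> float:
    """Real, orthonormal spherical harmonic Y_lm at polar angle theta and azimuth phi."""
    am = abs(m)
    k = math.sqrt((2 * l + 1) / (4 * math.pi)
                  * math.factorial(l - am) / math.factorial(l + am))
    p = legendre_p(l, am, math.cos(theta))
    if m == 0:
        return k * p
    if m > 0:
        return math.sqrt(2.0) * k * math.cos(m * phi) * p
    return math.sqrt(2.0) * k * math.sin(am * phi) * p


def sh_band_saliency(image, low: int, high: int):
    """Hypothetical helper: band-pass SH reconstruction of an equirectangular image.

    Projects the image onto bands low..high (inclusive), reconstructs from those
    coefficients only, and returns the per-pixel absolute value as saliency.
    """
    h, w = len(image), len(image[0])
    d_theta, d_phi = math.pi / h, 2.0 * math.pi / w
    thetas = [(i + 0.5) * d_theta for i in range(h)]  # polar angle of row centres
    phis = [(j + 0.5) * d_phi for j in range(w)]      # azimuth of column centres
    # Solid angle of each pixel row: sin(theta) dtheta dphi (equirectangular area weight).
    weights = [math.sin(t) * d_theta * d_phi for t in thetas]
    recon = [[0.0] * w for _ in range(h)]
    for l in range(low, high + 1):
        for m in range(-l, l + 1):
            basis = [[real_sh(l, m, t, p) for p in phis] for t in thetas]
            # Projection: c_lm ~= sum over pixels of f * Y_lm * d(omega).
            c = sum(image[i][j] * basis[i][j] * weights[i]
                    for i in range(h) for j in range(w))
            # Accumulate this coefficient's contribution to the band-pass signal.
            for i in range(h):
                for j in range(w):
                    recon[i][j] += c * basis[i][j]
    return [[abs(v) for v in row] for row in recon]
```

For example, a 16×32 frame that is dark except for a small bright patch near the equator yields its largest saliency on that patch, since every band contributes its maximum response at zero angular distance. This naive loop is far too slow for 4K frames; the per-pixel basis evaluations and the projection sums are independent of one another, which is what makes the computation a good fit for GPU parallelization.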
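One way to picture the trade-off between saliency coverage and camera jitter is as a best-path problem over yaw. The sketch below is an assumption, not the paper's spatiotemporal formulation: it scores each candidate yaw column by the saliency summed inside a horizontal window of `half_width` columns on each side, penalizes the wrapped yaw change between consecutive frames with a hypothetical weight `smooth_weight`, and uses Viterbi dynamic programming to choose the path that maximizes total coverage minus total movement. Pitch is ignored for brevity, and `column_coverage` and `plan_yaw_path` are hypothetical names.

```python
def column_coverage(saliency, half_width):
    """Saliency inside a window centred on each column (wrapping around 360 degrees)."""
    w = len(saliency[0])
    col_sums = [sum(row[j] for row in saliency) for j in range(w)]
    return [sum(col_sums[(c + k) % w] for k in range(-half_width, half_width + 1))
            for c in range(w)]


def plan_yaw_path(frames, half_width, smooth_weight):
    """Viterbi over yaw columns: maximize coverage minus smooth_weight * yaw change."""
    w = len(frames[0][0])

    def dist(a, b):
        # Shortest angular distance in columns, accounting for wrap-around.
        d = abs(a - b) % w
        return min(d, w - d)

    score = column_coverage(frames[0], half_width)
    back = []  # back[t][c] = best previous column for column c at frame t + 1
    for sal in frames[1:]:
        cov = column_coverage(sal, half_width)
        new_score, ptr = [], []
        for c in range(w):
            best_prev = max(range(w), key=lambda p: score[p] - smooth_weight * dist(p, c))
            new_score.append(score[best_prev] - smooth_weight * dist(best_prev, c) + cov[c])
            ptr.append(best_prev)
        score = new_score
        back.append(ptr)
    # Backtrack from the best final column to recover the whole yaw path.
    c = max(range(w), key=lambda k: score[k])
    path = [c]
    for ptr in reversed(back):
        c = ptr[c]
        path.append(c)
    return path[::-1]
```

Raising `smooth_weight` trades some saliency coverage for a steadier camera; setting it to zero reduces the plan to a per-frame argmax of coverage, which is exactly the behavior that produces jitter when the salient peak flickers between neighboring columns.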


Publications

2022 IEEE Conference on Virtual Reality and 3D User Interfaces (VR), 2022.

Keywords: 360 image, virtual reality, view synthesis, panorama, neural rendering, depth map, mesh rendering, inpainting, digital world

IEEE Transactions on Visualization and Computer Graphics (TVCG), 2021.

Keywords: 360° video, foveation, virtual reality, live video streaming, log-rectilinear, summed-area table, eye tracking, digital world

IEEE Computer Graphics and Applications (CGA), 2021.

Keywords: spherical harmonics, virtual reality, visual saliency, 360° videos, omnidirectional videos, perception, Itti model, spectral residual, GPGPU, CUDA, eye tracking, interactive graphics

Ruofei Du

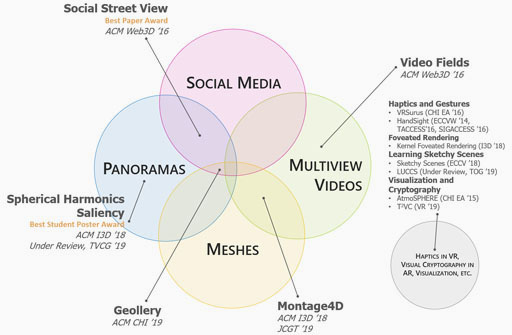

Ph.D. Dissertation, University of Maryland, College Park, 2018.

Keywords: social street view, geollery, spherical harmonics, 360 video, multiview video, montage4d, haptics, cryptography, metaverse, mirrored world

ACM SIGGRAPH Symposium on Interactive 3D Graphics and Games (I3D), 2018.

Keywords: spherical harmonics, virtual reality, visual saliency, 360° videos, omnidirectional videos, perception, Itti model, spectral residual, GPGPU, CUDA

Cited By

- Rectangular Mapping-based Foveated Rendering. The IEEE Conference on Virtual Reality and 3D User Interfaces 2022 (IEEE VR). Jiannan Ye, Anqi Xie, Susmjia Jabbireddy, Yunchuan Li, Xubo Yang, and Xiaoxu Meng.
- An Integrative View of Foveated Rendering. Bipul Mohanto, ABM Tariqul Islam, Enrico Gobbetti, and Oliver Staadt.
- Dynamic Viewport Selection-Based Prioritized Bitrate Adaptation for Tile-Based 360° Video Streaming. IEEE Access. Abid Yaqoob, Mohammed Amine Togou, and Gabriel-Miro Muntean.
- Application of Eye-tracking Systems Integrated Into Immersive Virtual Reality and Possible Transfer to the Sports Sector - a Systematic Review. Multimedia Tools and Applications. Stefan Pastel, Josua Marlok, Nicole Bandow, and Kerstin Witte.
- Learning Based Versus Heuristic Based: A Comparative Analysis of Visual Saliency Prediction in Immersive Virtual Reality. Computer Animation and Virtual Worlds. Mehmet Bahadir Askin and Ufuk Celikcan.
- Progressive Multi-scale Light Field Networks. arXiv.2208.06710. David Li and Amitabh Varshney.
- Wavelet-Based Fast Decoding of 360° Videos. arXiv.2208.10859. Colin Groth, Sascha Fricke, Susana Castillo, and Marcus Magnor.
- Bullet Comments for 360° Video. 2022 IEEE Conference on Virtual Reality and 3D User Interfaces (VR). Yi-Jun Li, Jinchuan Shi, Fang-Lue Zhang, and Miao Wang.
- Rega-Net: Retina Gabor Attention for Deep Convolutional Neural Networks. arXiv.2211.12698. Chun Bao, Jie Cao, Yaqian Ning, Yang Cheng, and Qun Hao.
- Foveated Rendering: A State-of-the-Art Survey. arXiv.2211.07969. Lili Wang, Xuehuai Shi, and Yi Liu.
- Masked360: Enabling Robust 360-degree Video Streaming with Ultra Low Bandwidth Consumption. IEEE Transactions on Visualization and Computer Graphics. Zhenxiao Luo, Baili Chai, Zelong Wang, Miao Hu, and Di Wu.
- Eye-tracked Virtual Reality: A Comprehensive Survey on Methods and Privacy Challenges. arXiv.2305.14080. Efe Bozkir, Süleyman Özdel, Mengdi Wang, Brendan David-John, Hong Gao, Kevin Butler, Eakta Jain, and Enkelejda Kasneci.
- Tiled Multiplane Images for Practical 3D Photography. arXiv.2309.14291. Numair Khan, Douglas Lanman, and Lei Xiao.
- Quality-Constrained Encoding Optimization for Omnidirectional Video Streaming. IEEE Transactions on Circuits and Systems for Video Technology. Chaofan He, Roberto G. de A. Azevedo, Jiacheng Chen, Shuyuan Zhu, Bing Zeng, and Pascal Frossard.
- Providing Privacy for Eye-Tracking Data with Applications in XR. University of Florida. Brendan David-John.
- Locomotion-aware Foveated Rendering. 2023 IEEE Conference Virtual Reality and 3D User Interfaces (VR). Xuehuai Shi, Lili Wang, Jian Wu, Wei Ke, and Chan-Tong Lam.
- Eye-tracked Evaluation of Subtitles in Immersive VR 360° Video. 2023 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW). Marta Brescia-Zapata, Krzysztof Krejtz, Andrew T. Duchowski, Christopher J. Hughes, and Pilar Orero.
- PanoGRF: Generalizable Spherical Radiance Fields for Wide-baseline Panoramas. arXiv.2306.01531. Zheng Chen, Yan-Pei Cao, Yuan-Chen Guo, Chen Wang, Ying Shan, and Song-Hai Zhang.
- Stripe Sensitive Convolution for Omnidirectional Image Dehazing. IEEE Transactions on Visualization and Computer Graphics. Dong Zhao, Jia Li, Hongyu Li, and Long Xu.
- Virtual Reality Telepresence: 360-Degree Video Streaming with Edge-Compute Assisted Static Foveated Compression. IEEE Transactions on Visualization and Computer Graphics. Xincheng Huang, James Riddell, and Robert Xiao.